Introduction to AGI and AI

Artificial Intelligence (AI) is a broad field of computer science concerned with creating systems that can perform tasks that typically require human intelligence, such as solving problems, understanding language, recognizing patterns, and making decisions. Within this expansive domain, various subfields have emerged, each focusing on a different approach to machine intelligence. Machine learning, natural language processing, and computer vision are just a few examples that illustrate the versatility of AI and its applications in contemporary society.

In contrast, Artificial General Intelligence (AGI) refers to a hypothetical type of AI that possesses the ability to understand, learn, and apply knowledge across a wide range of tasks at a level comparable to that of a human. While most current AI systems are specialized and designed for specific tasks, AGI aims to emulate the cognitive abilities of a human being, allowing for seamless transition between different domains of knowledge and experience. This distinction is crucial, as it highlights the limitations of existing AI technologies and underscores the ambitious pursuit of creating systems that possess true general intelligence.

Understanding these technologies is vital in today’s rapidly advancing digital landscape. The development of AI can be traced back to the 1950s, with significant milestones marking its evolution from simple rule-based systems to sophisticated models powered by deep learning. As AI continues to develop, the implications of achieving AGI should not be underestimated. The potential consequences range from revolutionizing industries and enhancing productivity to ethical concerns about job displacement and decision-making autonomy. Therefore, as we explore the realms of AI and AGI, it becomes increasingly important to grasp their foundational concepts and stay informed about their ongoing advancements and societal impacts.

Natural Language Processing (NLP)

Natural Language Processing (NLP) is a vital domain within the broader field of Artificial Intelligence (AI) that focuses on the interplay between computers and human language. The primary objective of NLP is to enable machines to understand, interpret, and respond to human language in a manner that is both meaningful and contextually appropriate. This complex area incorporates various subfields, including linguistic analysis, machine learning, and computational linguistics, making advancements in NLP increasingly pertinent in today’s technology-driven world.

One of the key concepts in NLP is tokenization, which breaks text down into manageable units such as words, subwords, and punctuation marks. Later stages of an NLP pipeline then operate on these units rather than on raw character strings. Together with morphological analysis, which examines the internal structure of words, tokenization forms the foundation of many NLP applications, such as sentiment analysis, named entity recognition, and machine translation. These applications support near-instant communication and enhance user experiences across many platforms.
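The following Python function is a minimal sketch of tokenization. It uses a regular expression from the standard `re` module to split text into word and punctuation tokens. The pattern and the name `tokenize` are illustrative assumptions. BERT and spaCy do not tokenize this way: production tokenizers handle contractions, URLs, and subword units far more carefully.

```python
import re

# Illustrative pattern (an assumption): a token is either a run of letters,
# digits, and apostrophes, or a single non-space punctuation character.
TOKEN_PATTERN = re.compile(r"[A-Za-z0-9']+|[^\sA-Za-z0-9']")


def tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens, discarding whitespace."""
    return TOKEN_PATTERN.findall(text)


if __name__ == "__main__":
    print(tokenize("NLP isn't magic, it's math!"))
    # ['NLP', "isn't", 'magic', ',', "it's", 'math', '!']
```

Each token can then be passed to later steps such as tagging or sentiment scoring.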

Real-world applications of NLP are abundant and span numerous industries. For instance, virtual assistants like Siri and Alexa rely on NLP to interpret and respond to voice commands. Chatbots use NLP to provide customer support, enabling businesses to automate interactions while maintaining a conversational tone. Text analysis tools powered by NLP offer valuable insights by gauging public opinion on social media or analyzing customer feedback.

Prominent NLP tools include Google’s BERT (Bidirectional Encoder Representations from Transformers), a pretrained language model, and spaCy, an open-source Python library for building NLP pipelines. Both are well regarded for handling context and nuance in human language. As NLP continues to evolve, its integration into AI systems will be fundamental to how people communicate and process information.

Computer Vision

Computer vision is a pivotal discipline within the broader field of Artificial Intelligence (AI) that focuses on enabling computers to interpret and understand visual information from the world. Computer vision systems use algorithms and learned models to process and analyze images or video feeds. This allows machines to perform tasks such as object recognition, image segmentation, and motion tracking. One of the core tasks in computer vision is image recognition, which today typically relies on deep learning to classify and identify objects within digital images. This generally involves training neural networks on large labeled datasets so that they learn the features and patterns that distinguish one object from another.

In addition to image recognition, computer vision encompasses a range of image processing techniques, in which algorithms enhance, manipulate, and analyze visual data to extract meaningful information. For instance, edge detection algorithms identify boundaries within images, while filtering techniques reduce noise and improve visual clarity. These methods are increasingly integrated into applications across diverse industries. In healthcare, for example, computer vision is used to analyze medical images, supporting diagnosis by automatically flagging tumors and other abnormalities.
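Edge detection and noise filtering are both commonly implemented by sliding a small kernel over the image (a convolution). The pure-Python sketch below shows this idea with a 3x3 mean filter for smoothing and a Sobel kernel for detecting vertical edges. It assumes a small grayscale image stored as nested lists. The function and constant names are hypothetical, and a real system would use an optimized library such as OpenCV or SciPy.

```python
Image = list[list[float]]


def convolve3x3(image: Image, kernel: Image) -> Image:
    """Slide a 3x3 kernel over the image (cross-correlation, as most CV libraries do).

    Only positions where the kernel fits entirely are computed (no padding),
    so the output is 2 pixels smaller in each dimension.
    """
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(1, rows - 1):
        row = []
        for c in range(1, cols - 1):
            acc = 0.0
            for dr in range(-1, 2):
                for dc in range(-1, 2):
                    acc += image[r + dr][c + dc] * kernel[dr + 1][dc + 1]
            row.append(acc)
        out.append(row)
    return out


# Mean (box) filter: averages each 3x3 neighborhood, which smooths out noise.
MEAN = [[1 / 9] * 3 for _ in range(3)]

# Sobel kernel for horizontal intensity changes, i.e. vertical edges.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

if __name__ == "__main__":
    # A 5x5 image with a dark left part and a bright right part: one vertical edge.
    img = [[0, 0, 0, 10, 10] for _ in range(5)]
    for row in convolve3x3(img, SOBEL_X):
        print(row)  # [0.0, 40.0, 40.0]: large values mark the edge
```

The filter responds with zero in the flat dark region and with large values where brightness changes. That strong response is what an edge detector thresholds.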

The automotive industry has also recognized the potential of computer vision: self-driving cars rely heavily on it for navigation and obstacle detection. Retail businesses use computer vision to monitor customer behavior, manage inventory, and enhance shopping experiences through technologies such as facial recognition and augmented reality overlays. Machines’ visual understanding continues to improve. As a result, computer vision is becoming not just a subset of AI but a core component driving the future of numerous sectors, transforming how machines perceive and interact with the world.

Robotics and the Integration of AI

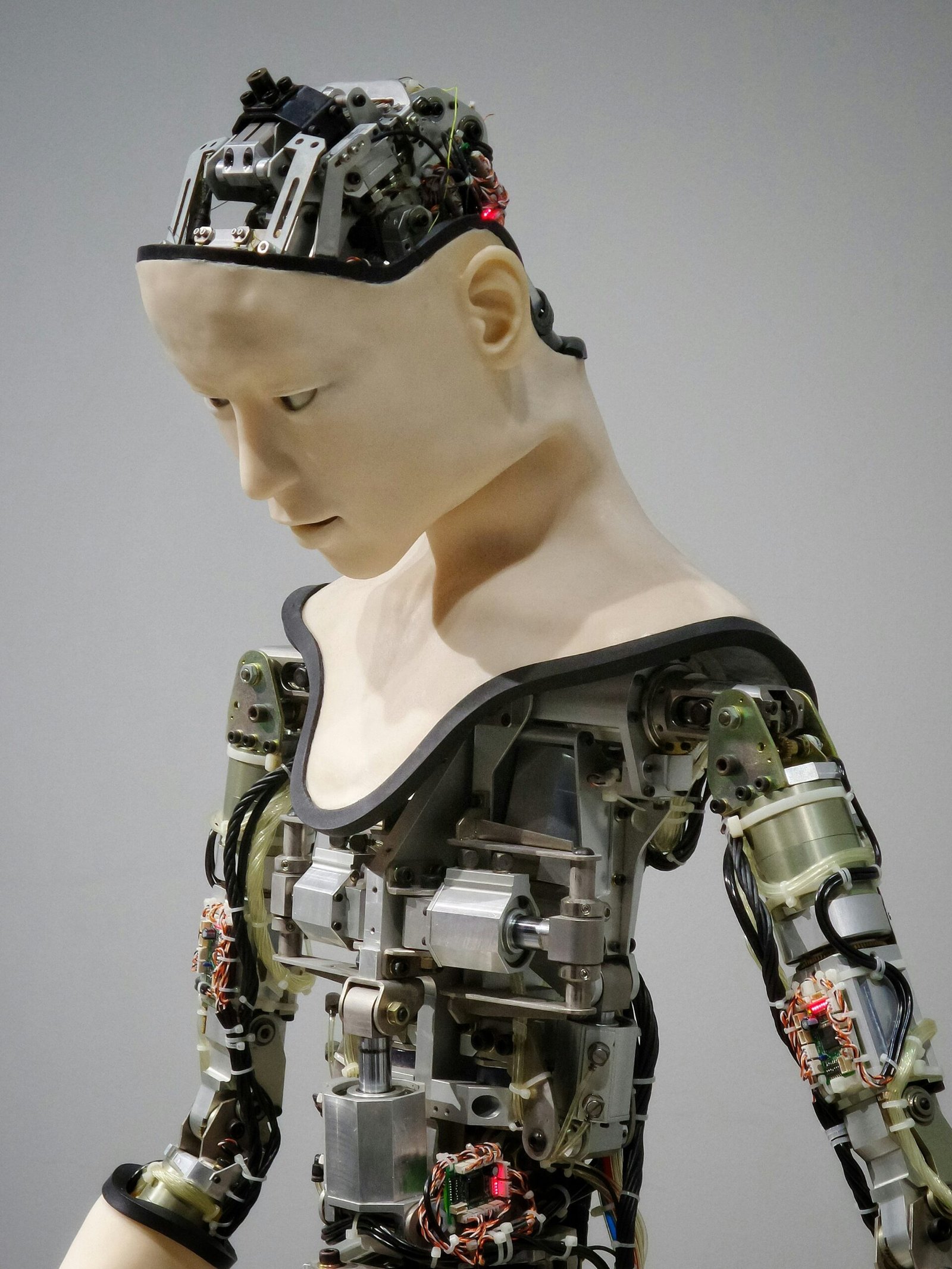

Robotics has evolved significantly in recent years, propelled by advancements in artificial intelligence (AI). Integrating AI allows machines to execute tasks autonomously, turning traditional robots into intelligent systems capable of learning and adapting to dynamic environments. The fusion of AI and robotics has produced many types of robots that serve diverse functions across industries, including manufacturing, healthcare, and services.

One prominent type of robot is the industrial robot, used primarily in manufacturing for tasks such as welding, assembly, and packaging. AI can make these robots more efficient and precise, for example through machine learning algorithms that refine their operating strategies over time. Similarly, autonomous mobile robots (AMRs) have become significant players in logistics and warehouse management. They navigate complex environments using AI algorithms that make decisions in real time.

In the healthcare sector, robots equipped with AI functionalities are making substantial strides. Surgical robots improve precision in operations, while social robots assist in therapy and eldercare, providing companionship and monitoring health conditions. This application showcases how intelligent systems enhance not only productivity but also the well-being of individuals, demonstrating the versatility of AI in robotics.

Looking toward the future, the potential for AI-driven robotics appears vast. Innovations such as collaborative robots (cobots) reflect a trend toward machines and humans working side by side, enhancing productivity and safety in the workplace. Furthermore, advancements in AI techniques such as reinforcement learning and natural language processing are expected to push the boundaries of robotic capabilities even further.

In conclusion, the integration of artificial intelligence in robotics is revolutionizing various sectors by enhancing the functionality and efficiency of robots. As intelligent systems continue to develop, the collaborative potential between humans and machines is likely to unlock new opportunities, paving the way for a future where robotics plays an even more significant role in our daily lives.

Machine Learning

Machine learning is a pivotal subset of artificial intelligence (AI) that enables systems to learn from data and improve their performance without being explicitly programmed for each task. Using a variety of algorithms, machine learning allows computers to recognize patterns, make predictions, and improve operational efficiency across a wide range of applications. Machine learning approaches are commonly grouped into three paradigms: supervised, unsupervised, and reinforcement learning.

In supervised learning, algorithms are trained on labeled datasets, in which each input is paired with the correct output. This approach is widely used in applications such as image recognition, speech recognition, and predictive analytics. Unsupervised learning, by contrast, works with unlabeled data, and the system identifies hidden patterns or intrinsic structure on its own. Clustering techniques such as K-means and hierarchical clustering are frequently used in this context to group similar data points. Lastly, in reinforcement learning, an agent learns to make decisions through trial and error, receiving rewards or penalties as feedback on its actions.
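The sketch below shows how K-means groups similar points in unlabeled data. It implements Lloyd's algorithm on 2D points in plain Python. The function name, the random initialization from existing data points, and the iteration cap are assumptions chosen for brevity. Libraries such as scikit-learn provide more robust implementations with smarter initialization (k-means++).

```python
import random

Point = tuple[float, float]


def kmeans(points: list[Point], k: int, iterations: int = 100,
           seed: int = 0) -> tuple[list[Point], list[int]]:
    """Cluster 2D points with Lloyd's algorithm; returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids: list[Point] = rng.sample(points, k)  # start from k data points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        # (squared Euclidean distance).
        labels = [
            min(range(k), key=lambda j: (p[0] - centroids[j][0]) ** 2
                                        + (p[1] - centroids[j][1]) ** 2)
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids: list[Point] = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append((sum(m[0] for m in members) / len(members),
                                      sum(m[1] for m in members) / len(members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # converged: assignments will not change any more
        centroids = new_centroids
    return centroids, labels


if __name__ == "__main__":
    pts = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
    centroids, labels = kmeans(pts, k=2)
    # Expected: the first three points share one label, the last three the other.
    print(labels, centroids)
```

The algorithm alternates between assigning points and moving centroids until nothing changes. Because the result depends on the starting centroids, implementations often run it several times and keep the best clustering.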

The significance of data quality cannot be overstated in machine learning. Clean, relevant, high-quality data significantly improves the performance of algorithms. Data preparation, including cleaning, normalization, and structuring, is crucial for model accuracy. In sectors such as finance, healthcare, and marketing, the applications of machine learning are transformative. For instance, machine learning models can analyze large volumes of data quickly to detect fraudulent transactions in real time or optimize supply chain logistics through predictive modeling.
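Normalization, one of the data preparation steps mentioned above, rescales numeric features so that no feature dominates simply because of its units. Below is a minimal sketch of two common forms: min-max scaling to [0, 1] and z-score standardization. The function names are hypothetical. Constant columns are mapped to zeros here to avoid dividing by zero, which is an assumption of this sketch.

```python
def min_max_scale(values: list[float]) -> list[float]:
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / span for v in values]


def z_score(values: list[float]) -> list[float]:
    """Standardize values to mean 0 and (population) standard deviation 1."""
    n = len(values)
    mean = sum(values) / n
    std = (sum((v - mean) ** 2 for v in values) / n) ** 0.5
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


if __name__ == "__main__":
    print(min_max_scale([10.0, 20.0, 30.0]))  # [0.0, 0.5, 1.0]
    print(z_score([2, 4, 4, 4, 5, 5, 7, 9]))  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```

In practice, the minimum, maximum, mean, and standard deviation should be computed on the training data only. The same values are then reused on new data, so that information from the evaluation set does not leak into training.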

Visual aids, such as diagrams illustrating the workflow of different machine learning processes, can further illuminate this complex field. These visuals help demystify the intricacies involved in training models and highlight the step-by-step progression from data collection to deployment. Through a deeper understanding of machine learning, individuals and organizations can harness its potential to drive innovation and efficiency in their operations.

Deep Learning

Deep learning, a subset of machine learning, has gained immense popularity because it can learn from extensive datasets using multi-layered architectures known as neural networks. Traditional machine learning algorithms often require manual feature engineering. Deep learning models, by contrast, learn features directly from raw data. This enables them to capture intricate patterns that would be nearly impossible for humans to design by hand.

At the core of deep learning are neural networks, which consist of interconnected nodes, or “neurons.” These networks are organized in layers, and each layer transforms its input through a weighted sum followed by a nonlinear activation function. Common types of neural networks include feedforward neural networks, convolutional neural networks (CNNs), and recurrent neural networks (RNNs). CNNs in particular have revolutionized computer vision, enabling major advances in image and video analysis. RNNs are designed for sequential data and have been widely used in natural language processing. In recent years, however, transformer-based models such as BERT have largely taken over that role.
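The layer-by-layer transformation described above can be sketched as a tiny feedforward network in plain Python. Each layer computes a weighted sum plus a bias, followed by a nonlinear activation. The weights are hand-picked for illustration rather than learned. They make the network approximate XOR, a function that no single linear layer can represent. All names are hypothetical.

```python
import math

Vector = list[float]
Matrix = list[list[float]]


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer: out[i] = sum_j weights[i][j] * x[j] + bias[i]."""
    return [sum(w * xj for w, xj in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: Vector) -> Vector:
    """Nonlinear activation: negative values become 0."""
    return [max(0.0, a) for a in v]


def sigmoid(v: Vector) -> Vector:
    """Squash each value into the range (0, 1)."""
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


# Hypothetical hand-picked parameters: 2 inputs -> 2 hidden units -> 1 output.
W1: Matrix = [[1.0, -1.0], [-1.0, 1.0]]
B1: Vector = [0.0, 0.0]
W2: Matrix = [[2.0, 2.0]]
B2: Vector = [-1.0]


def forward(x: Vector) -> Vector:
    """Pass the input through each layer in turn."""
    hidden = relu(dense(x, W1, B1))  # computes max(0, x0 - x1) and max(0, x1 - x0)
    return sigmoid(dense(hidden, W2, B2))


if __name__ == "__main__":
    for x in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
        # The output is above 0.5 only when the two inputs differ (XOR).
        print(x, round(forward(x)[0], 3))
```

Without the ReLU in the hidden layer, the two layers would collapse into a single linear map, and the XOR pattern would be impossible. This is why the nonlinear activation matters.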

Training a neural network involves feeding it large amounts of data and adjusting its parameters to minimize the error in its predictions. This is typically done with gradient descent methods. These methods iteratively update the model’s weights and biases in the direction that reduces the error, using gradients computed by backpropagation. High-performance computing plays a crucial role in this process, as deep learning models often demand significant computational resources. Using graphics processing units (GPUs) and specialized hardware accelerators, researchers can train deep learning models much faster than on conventional processors.
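Here is a minimal illustration of gradient descent. For simplicity, the sketch fits a one-feature linear model rather than a neural network, so the gradients of the mean squared error can be written by hand. In a deep network, backpropagation computes the same kind of gradient for every weight. The function name, learning rate, and step count are assumptions chosen for this toy data.

```python
def fit_line(xs: list[float], ys: list[float], lr: float = 0.05,
             steps: int = 2000) -> tuple[float, float]:
    """Fit y ≈ w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every example under the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(error^2) w.r.t. w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Step against the gradient; lr (the learning rate) sets the step size.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
    w, b = fit_line(xs, ys)
    print(f"w = {w:.4f}, b = {b:.4f}")  # approaches w = 2, b = 1
```

The learning rate is the key design choice. If it is too large, the updates overshoot and diverge. If it is too small, convergence becomes very slow.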

Several successful applications of deep learning demonstrate its efficacy across various sectors. For instance, in healthcare, deep learning is used for diagnostic imaging analysis, aiding in the early detection of diseases. In the automotive industry, autonomous driving systems leverage deep learning algorithms to interpret sensor data and navigate environments safely. These examples underscore the transformative potential of deep learning, establishing it as a vital component of the broader Artificial Intelligence landscape.

Ethics and AI Governance

The rapid advancement of Artificial Intelligence (AI), together with the prospect of Artificial General Intelligence (AGI), has prompted critical conversations about ethics and governance. As these technologies permeate more aspects of society, it becomes imperative to examine their ethical implications and the governance structures needed to regulate their use. A primary ethical concern is bias. Algorithms trained on historical data can inadvertently embed existing social biases, leading to unfair treatment of certain demographic groups. Such bias can surface in consequential areas such as hiring, law enforcement, and loan approvals, so developers must actively work to ensure fairness and inclusivity in AI systems.
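One simple way to start checking a decision system for bias is to compare outcome rates across groups, a criterion known as demographic parity. The sketch below computes per-group approval rates and the largest gap between them. The data and function names are hypothetical. Demographic parity is only one of several fairness criteria, and a gap alone does not prove unfair treatment.

```python
from collections import defaultdict


def approval_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive decisions per group, from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approvals: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions: list[tuple[str, bool]]) -> float:
    """Largest difference in approval rate between any two groups (0 means parity)."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical loan decisions for two anonymized groups, "A" and "B".
    decisions = [("A", True), ("A", True), ("A", False), ("A", True),
                 ("B", True), ("B", False), ("B", False), ("B", False)]
    print(approval_rates(decisions))          # {'A': 0.75, 'B': 0.25}
    print(demographic_parity_gap(decisions))  # 0.5
```

A large gap is a signal to investigate the training data and features more closely, not a verdict on its own.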

Accountability presents another significant challenge in the realm of AI governance. As machines take on more decision-making roles, determining responsibility for erroneous outcomes becomes more complex. If an AI system causes harm or makes a flawed decision, the question arises: who is accountable? This dilemma necessitates the establishment of clear guidelines that delineate the responsibilities of AI developers, users, and organizations. Moreover, the potential job displacement caused by the rise of AI calls for urgent discussions on how best to transition workers into new roles. Policymakers, educators, and industry leaders must collaborate to devise strategies that equip the workforce with the necessary skills for an evolving job landscape.

The societal impact of AI technologies is another crucial area that demands thoughtful consideration. As these technologies evolve, their influence on privacy, security, and personal autonomy becomes increasingly pronounced. Ongoing efforts are being made globally to establish ethical guidelines and frameworks for the responsible development and deployment of AI and AGI. Initiatives such as the Partnership on AI and the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems seek to address these concerns and provide a roadmap for ethical AI governance. By promoting transparency, accountability, and ethical considerations, these initiatives aim to foster a sustainable future where AI technologies benefit all of humanity.

Applications of AGI and AI in Various Industries

Artificial Intelligence (AI) is rapidly transforming numerous industries, reshaping processes and improving efficiency. Artificial General Intelligence (AGI), if achieved, could extend these changes further. In healthcare, machine learning algorithms and natural language processing are used to analyze vast datasets, improving diagnostic accuracy and patient care. For instance, predictive analytics tools can identify patient risks and help personalize treatment plans, thereby improving health outcomes.

In finance, AI is utilized in algorithmic trading, fraud detection, and credit risk assessment. Financial institutions are increasingly relying on AI-powered systems to analyze customer behavior, predict market trends, and detect anomalies in transaction patterns. These technologies not only streamline operations but also facilitate informed decision-making, driving profitability while ensuring compliance with regulatory standards.
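Anomaly detection in transaction data can take many forms. As a hedged illustration, the sketch below uses a simple statistical baseline: the modified z-score, which is based on the median absolute deviation (MAD). The constants 0.6745 and 3.5 are commonly used values for this rule of thumb. The function name and sample amounts are hypothetical. Real fraud-detection systems typically use learned models over many features, whereas this sketch looks only at one column of amounts.

```python
from statistics import median


def flag_anomalies(amounts: list[float], threshold: float = 3.5) -> list[bool]:
    """Flag amounts whose modified z-score exceeds the threshold.

    Uses the median and the median absolute deviation (MAD) instead of the
    mean and standard deviation, so a single huge outlier cannot mask itself.
    """
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        # Degenerate case: at least half the values equal the median,
        # so flag anything that differs from it.
        return [a != med for a in amounts]
    return [abs(0.6745 * (a - med) / mad) > threshold for a in amounts]


if __name__ == "__main__":
    # Hypothetical purchase amounts for one customer.
    amounts = [42.0, 38.5, 40.0, 41.2, 39.9, 950.0, 40.3]
    print(flag_anomalies(amounts))
    # [False, False, False, False, False, True, False]
```

The median and MAD were chosen over the mean and standard deviation because the outlier itself would inflate the standard deviation and could hide its own anomaly.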

The automotive industry has also seen a significant impact from AI, particularly in the development of autonomous vehicles. AI systems process real-time data from multiple sensors so that vehicles can operate safely with little or no human intervention. AI-driven analytics also optimize supply chain management by predicting demand fluctuations and improving inventory control.

In the education sector, AI-powered learning management systems offer personalized educational experiences tailored to individual student needs. These systems analyze learning patterns and provide targeted resources, making education more accessible and effective. Additionally, chatbots and virtual tutors are increasingly deployed to assist students in mastering complex subjects.

While the deployment of AI technologies brings numerous benefits across these industries, challenges such as data privacy concerns, ethical considerations, and workforce displacement must be carefully addressed. As organizations venture into the realm of AGI and AI, striking a balance between innovation and responsible implementation remains paramount.

Future Trends in AGI and AI

The landscape of Artificial General Intelligence (AGI) and Artificial Intelligence (AI) is continuously evolving, driven by rapid technological advancements and growing societal interest. As we look toward the next decade, several key trends are expected to emerge, shaping the trajectory of these fields. First and foremost, the integration of AI into everyday life is set to expand significantly. As AI systems become more sophisticated, their applications will permeate various sectors, including healthcare, finance, transportation, and education. This increase in integration will not only enhance productivity but will also lead to more personalized experiences for users.

In addition to practical applications, the ethical considerations surrounding AGI and AI will gain prominence. As these technologies evolve, it will be crucial to address concerns related to data privacy, bias, and decision-making accountability. Organizations will likely invest in developing frameworks that ensure ethical AI development while fostering transparency and building public trust. This focus on ethics will be complemented by ongoing discussions about regulatory measures aimed at guiding the responsible use of AI technologies.

Another anticipated trend is the advancement of AGI capabilities, as researchers aim to create systems that can perform a wide range of tasks with human-like understanding. This drive towards more generalized intelligence will leverage breakthroughs in machine learning and neuro-inspired architectures. As the boundary between narrow AI and AGI blurs, we may witness systems that not only learn from vast datasets but also exhibit common sense reasoning and emotional intelligence.

Lastly, public acceptance and societal readiness will play a crucial role in shaping the future of AGI and AI. As these technologies become more ubiquitous, engaging diverse stakeholders in conversations about their implications will be essential. By fostering collaborative efforts among technologists, ethicists, and policymakers, society can better navigate the complexities of AI integration and maximize its benefits while mitigating potential risks.

Conclusion and Call to Action

Throughout this exploration of Artificial General Intelligence (AGI) and Artificial Intelligence (AI), we have highlighted the significant distinctions and intersections between these two transformative technologies. AGI represents a level of intelligence typified by a system’s ability to perform any intellectual task that a human can do, while AI, in its current iteration, focuses on specialized problem-solving capabilities. The advancements in AI demonstrate extraordinary potential in various sectors, but it is crucial to remain cognizant of the ongoing discussions surrounding AGI, as its development could redefine our understanding of machines and intelligence itself.

The implications of these technologies extend to numerous domains, including healthcare, finance, and education, creating both opportunities and challenges. As stakeholders in these fields, it is imperative to stay informed about the latest developments in AGI and AI. Continuous education will empower us to contribute to the discourse, ensuring we approach these innovations ethically and responsibly. Consider subscribing to reputable journals, attending workshops, or participating in online forums dedicated to AGI and AI advancements. Engaging in conversations with peers can offer valuable insights and stimulate further exploration into this fascinating subject.

We encourage our readers to delve deeper into the nuances of AGI and AI, not just as passive consumers of knowledge, but as active participants in discussions that shape the future of technology. By broadening our understanding, we can collectively navigate the complex landscape of intelligent systems. The future holds immense potential, and by sharing knowledge and insights, we can foster a more informed community prepared to embrace the changes that AGI and AI will undoubtedly bring.